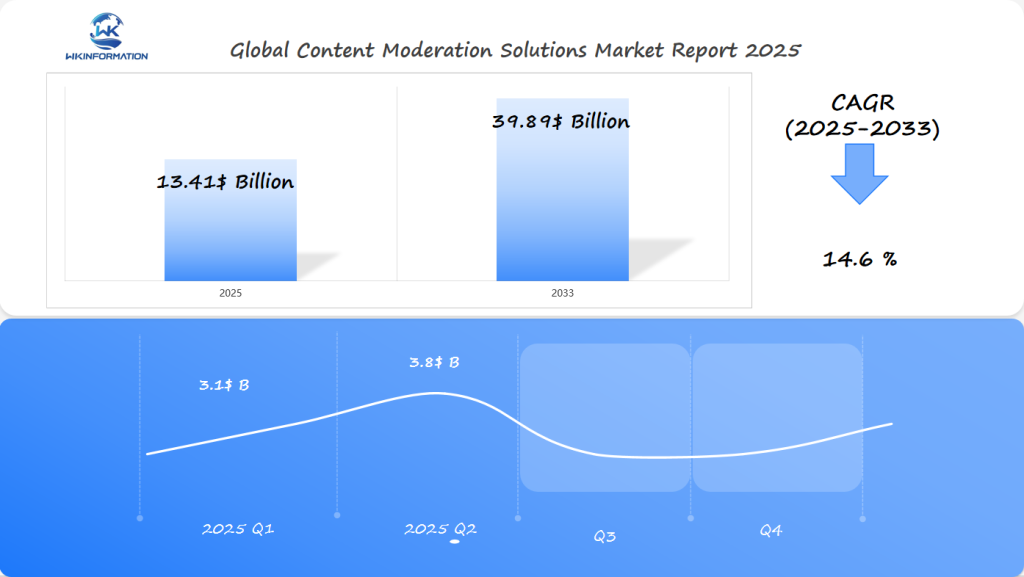

Content Moderation Solutions Market to Cross $13.41 Billion by 2025 with Compliance Pressure in the U.S., Germany, and India

Explore the growing Content Moderation Solutions Market expected to reach $13.41 billion by 2025, driven by increasing regulatory compliance demands and content safety requirements.

Content Moderation Solutions Market Outlook for Q1 and Q2 2025

The Content Moderation Solutions market is forecast to reach $13.41 billion in 2025, with a CAGR of 14.6% from 2025 to 2033. In Q1 2025, the market will likely reach approximately $3.1 billion, driven by the growing need for platforms, social media networks, and online communities to ensure the safe and responsible sharing of content. The U.S., Germany, and India are expected to lead the market, with increasing regulatory pressures and rising concerns over online content safety.

By Q2 2025, the market is projected to grow to about $3.8 billion, as demand for artificial intelligence and human-powered moderation services rises. In the U.S., the push for stricter content regulations and the need for brands to maintain brand safety will continue to drive demand for moderation solutions. In India and Germany, growing internet penetration and the popularity of social media platforms will also lead to a surge in the need for effective moderation strategies to curb harmful or inappropriate content online.
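
The figures above can be sanity-checked with a little arithmetic. The sketch below is illustrative only: it assumes the 14.6% CAGR compounds annually from the 2025 base over the eight years to 2033, and the function and variable names are made up for this example rather than taken from the report.

```python
# Figures quoted in the text above.
BASE_YEAR = 2025
BASE_VALUE_BN = 13.41     # forecast market size for 2025, in USD billions
CAGR = 0.146              # 14.6% compound annual growth rate, 2025 to 2033
Q1_BN, Q2_BN = 3.1, 3.8   # approximate quarterly figures for 2025


def project(value_bn: float, rate: float, years: int) -> float:
    """Compound `value_bn` forward by `years` at annual growth `rate`."""
    return value_bn * (1 + rate) ** years


# Implied market size at the end of the forecast window (an extrapolation,
# not a number stated in the report).
implied_2033 = project(BASE_VALUE_BN, CAGR, 2033 - BASE_YEAR)

# Revenue left for the second half of 2025 if the full-year figure holds.
implied_h2 = BASE_VALUE_BN - (Q1_BN + Q2_BN)

print(f"Implied 2033 market size: ${implied_2033:.1f}B")
print(f"Implied H2 2025 revenue:  ${implied_h2:.2f}B")
```

Under these assumptions the market would roughly triple by 2033, and the second half of 2025 would need to contribute about $6.5 billion to reach the full-year forecast.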

Content Moderation Solutions market chain from upstream to downstream

The content moderation solutions market combines technology and services to help businesses screen and manage digital content.

The market chain links upstream technology providers with the downstream services that apply their tools, so that digital content is reviewed consistently across platforms.

Key Players in the Content Moderation Ecosystem

The ecosystem has key players for good content management:

- AI technology developers

- Machine learning engineers

- Human moderation specialists

- Platform security teams

- Regulatory compliance experts

Evolution of Content Moderation Technologies

Content moderation technology has evolved through three broad generations. Upstream providers now use advanced AI for screening, which lets downstream services review content faster.

| Technology Generation | Key Characteristics | Moderation Approach |
|---|---|---|
| First Generation | Manual Review | Human-only screening |
| Second Generation | Rule-based Filtering | Keyword and pattern detection |
| Third Generation | AI-Powered Moderation | Machine learning and predictive analysis |

Now, upstream providers make intelligent filtering systems. These systems help downstream services handle lots of digital content fast and accurately.
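
To make the second-generation approach in the table above concrete, here is a minimal sketch of keyword and pattern detection. The blocklist, the link-count rule, and the function name are all hypothetical choices for illustration; production rule sets are far larger and policy-specific.

```python
import re

# Hypothetical blocklist; a real deployment would maintain much larger,
# policy-specific lists.
BLOCKED_KEYWORDS = {"scam", "spamword"}

# Hypothetical pattern rule: three or more URLs in a single post.
URL_PATTERN = re.compile(r"https?://\S+")


def rule_based_flags(text: str) -> list[str]:
    """Return the names of the rules that `text` triggers."""
    flags = []
    # Keyword rule: case-insensitive match on whole words.
    words = set(re.findall(r"[a-z']+", text.lower()))
    if words & BLOCKED_KEYWORDS:
        flags.append("blocked_keyword")
    # Pattern rule: many links in one post is treated as suspicious.
    if len(URL_PATTERN.findall(text)) >= 3:
        flags.append("link_flood")
    return flags


print(rule_based_flags("This is a SCAM offer"))
```

Rules like these are fast and transparent but blind to context, which is the gap third-generation machine learning systems aim to close.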

Key trends in AI-driven and manual Content Moderation services

The world of content moderation is changing fast. New tech and the growth of digital spaces are driving these changes. Companies are looking for ways to handle online content well, using tech and human insight together.

AI moderation is becoming a key tool for dealing with lots of digital content. Machine learning can spot:

- Inappropriate visual content

- Hate speech patterns

- Potential misinformation

- Cyberbullying indicators

Advancements in Machine Learning for Content Moderation

New technologies in natural language processing and computer vision have greatly enhanced AI moderation. Deep learning models are now capable of understanding context, nuances, and cultural differences more effectively than before.

The Role of Human Moderators in Complex Decision-Making

AI offers scalable solutions, but human moderators are key for tricky content. Hybrid approaches that mix AI with human skills are now the best way to moderate content.

Manual moderation is great at solving problems that AI might miss. Human moderators bring:

- Cultural understanding

- Emotional intelligence

- Ethical decision-making capabilities

“The future of content moderation lies in seamless collaboration between AI technologies and human expertise.”

More companies are using a mix of AI and manual review. This hybrid method creates better and more detailed ways to manage content.

Challenges related to regulation and platform liability

Digital platforms face big challenges in managing content and their legal duties. The world of online content moderation is changing fast. This creates tough legal and ethical problems for tech companies.

Free speech and controlling content are big issues for digital platforms:

- Navigating diverse international legal frameworks

- Protecting user rights while maintaining platform safety

- Implementing consistent content moderation policies

- Balancing technological solutions with human oversight

Navigating Complex International Regulations

Different countries have different rules for online content. Platforms need flexible plans that follow local laws but keep operations consistent worldwide. As laws become more complex, the responsibility of platforms becomes more intricate.

Balancing Free Speech and Content Control

Digital platforms find it hard to balance protecting user speech and stopping harmful content. Good content moderation needs smart tech and careful human checks.

The challenge lies not in eliminating all controversial content, but in creating intelligent, context-aware moderation systems.

New technologies such as AI and machine learning may help address these problems by letting platforms build moderation strategies that better respect both legal requirements and user rights.

Global geopolitical concerns over digital content control

The world of digital content moderation is a complex battlefield. It mixes international politics with digital control strategies. Countries are trying to manage online information while protecting their interests.

Cross-border moderation is a big challenge for digital platforms and governments. Different places have different views on content regulation:

- Western democracies focus on free speech

- Authoritarian regimes control the narrative

- New digital markets aim for balanced rules

Understanding International Digital Borders

Controlling digital content has become an important aspect of global politics. Governments view online platforms as a means to influence public opinion and national discussions.

“Content moderation is no longer just about filtering inappropriate material—it’s about managing global information ecosystems.”

Geopolitical Tensions Shaping Content Policies

The combination of technology and international relations makes moderation complicated. Platforms have to find a way to balance diplomatic requirements with content policies that honor various cultures and political systems.

Handling cross-border moderation requires a thorough understanding of local problems, laws, and cultural beliefs.

Segmentation of Content Moderation Solutions by service type

The content moderation solutions market keeps growing, offering different ways to keep digital spaces safe and compliant. Service segmentation is key for companies wanting to manage content well.

Companies now see how complex digital content screening is. They need smart strategies for different platform needs. The market has three main service types:

- AI solutions for automated content filtering

- Human moderation services for nuanced context evaluation

- Hybrid approaches combining technological and human expertise

AI-Powered Moderation Solutions

AI has transformed content moderation by making it quick and scalable. Machine learning algorithms can rapidly analyze large volumes of digital content, identifying potential issues with high precision.

Human Moderation Services

Even with AI, human moderation is still vital. It helps understand complex cultural and contextual nuances. Trained moderators catch things AI might miss, making content evaluation more thorough.

Hybrid Moderation Approaches

The most effective content moderation now combines AI with human review. This pairing draws on the speed of AI and the depth of human judgment to create a robust, flexible screening system.

Industry-specific applications in media, education, and social platforms

Content moderation solutions have changed how we use digital platforms. Now, companies use advanced tech to manage online content. This keeps users safe and platforms trustworthy.

Different fields use their own ways to handle content moderation. They tackle unique problems and needs:

Social Media Moderation Strategies

Social media moderation is key for online safety. Platforms use AI tools to:

- Find and remove harmful content

- Keep user privacy safe

- Stop cyberbullying

- Reduce legal risks

Educational Content Filtering

Schools use content filters to keep learning spaces safe. Effective screening tools block inappropriate content while allowing students to access important educational materials.

Media and Entertainment Industry Solutions

The media industry requires intelligent content moderation for user-generated content. Streaming platforms and digital media outlets employ comprehensive moderation techniques to strike a balance between artistic expression and community guidelines.

Advancements in technology continue to enhance content moderation in these critical areas, ensuring that digital environments remain secure, constructive, and enjoyable for all users.

Worldwide market landscape for Content Moderation Solutions

The global market for content moderation solutions is rapidly evolving due to the rise of digital platforms and intricate online environments. As businesses expand their digital risk management efforts, we can expect growth in various sectors.

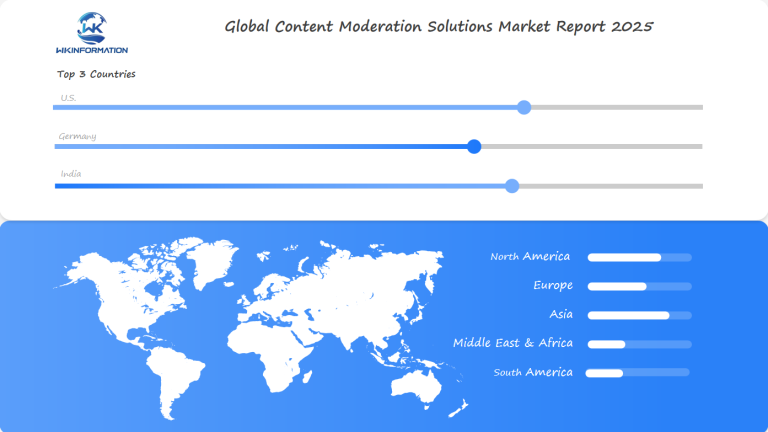

Regional Trends in Content Moderation Solutions

The content moderation solutions market exhibits intriguing regional patterns. According to market research, there are significant variations in how different regions adopt these solutions.

Regional Market Shares and Growth Projections

Here are some key points about regional markets:

- North America leads with about 34% of market revenue

- Asia-Pacific is growing fast

- European markets are integrating technology steadily

Emerging Markets in Content Moderation

Emerging markets are changing the global content moderation scene. Markets such as India, Brazil, and Southeast Asia are seeing rapid growth in digital platforms, which opens up significant opportunities for content moderation technology.

The emerging markets bring new challenges and creative solutions. They’re pushing tech forward and making the global market more diverse. These areas are finding smart ways to handle digital content, keeping cultural and legal needs in mind.

U.S. market focus on legal compliance and platform safety

The U.S. market for content moderation solutions is crucial in addressing issues faced by digital platforms. Legal regulations and ensuring the safety of these platforms are primary concerns for technology companies. Content moderation strategies play a critical role in maintaining secure online environments.

American tech platforms are under growing pressure to establish robust content moderation practices in order to safeguard users and comply with legal requirements. They must also explore innovative approaches to managing risks in the digital landscape.

Impact of Section 230 on Content Moderation Practices

Section 230 of the Communications Decency Act is central to U.S. online content rules. It shields digital platforms from liability for most user-generated content and protects their good-faith decisions to moderate it.

- Protects platforms from liability for user content

- Enables flexible content moderation strategies

- Supports innovation in digital communication

Initiatives to Combat Online Harassment and Misinformation

Tech firms are creating advanced methods to fight online dangers. Proactive content moderation is key to making digital spaces safer.

| Moderation Strategy | Key Focus Areas | Implementation Approach |
|---|---|---|
| AI-Powered Filtering | Hate Speech Detection | Machine Learning Algorithms |
| Human Review Panels | Context-Based Evaluation | Expert Content Analysts |
| Community Guidelines | User Behavior Monitoring | Automated Reporting Systems |

The U.S. market keeps growing, with platform safety a major focus for digital companies that aim to maintain user trust and comply with the law.

Germany’s regulatory approach and content governance

Germany has made significant changes in how it moderates online content. The country has implemented strict regulations to address issues related to online content. At the forefront of these efforts is the Network Enforcement Act (NetzDG).

NetzDG plays a crucial role in regulating online content. It places an obligation on social media platforms to respond promptly to harmful content. This law mandates that they swiftly remove illegal content, thereby impacting their operations in Germany.

Key Aspects of NetzDG Implementation

- Requires social media platforms to remove manifestly illegal content within 24 hours of a complaint, and other illegal content generally within seven days

- Imposes substantial financial penalties for non-compliance

- Establishes clear reporting mechanisms for users

Digital platforms must now spend a lot on better content moderation tools. This has led to debates about how to balance free speech with controlling content.

“NetzDG sets a global precedent for responsible digital ecosystem management” – Digital Policy Experts

Impact on Content Moderation Technologies

The law has driven big changes in how content is managed. AI and machine learning are now key in making fast, accurate checks. These tools help meet NetzDG’s demands.

| Content Moderation Requirement | NetzDG Compliance Strategy |
|---|---|
| Illegal Content Detection | AI-powered screening algorithms |
| Removal Timeframe | Within 24 hours of notification for manifestly illegal content |
| Penalty for Non-Compliance | Up to €50 million |

The German way of managing content is shaping global digital policies. NetzDG is seen as a key example for other countries to follow.
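
The 24-hour removal timeframe in the table above translates naturally into a deadline check inside a moderation queue. The following is a minimal sketch under that single assumption; the function names are hypothetical and nothing here should be read as legal guidance on how NetzDG deadlines are computed.

```python
from datetime import datetime, timedelta, timezone

# Removal window taken from the table above; illustrative only.
REMOVAL_WINDOW = timedelta(hours=24)


def removal_deadline(notified_at: datetime) -> datetime:
    """Latest time by which a reported item should be actioned."""
    return notified_at + REMOVAL_WINDOW


def is_overdue(notified_at: datetime, now: datetime) -> bool:
    """True if the removal window has already elapsed at `now`."""
    return now > removal_deadline(notified_at)


# Example: a complaint received at noon UTC on 1 January 2025.
reported = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
print(removal_deadline(reported).isoformat())
```

Using timezone-aware timestamps avoids ambiguity when reports and reviewers sit in different regions.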

India’s digital expansion boosting Content Moderation needs

The digital world in India is growing fast, changing how we handle online content. With over 624 million people online, India is key for digital platforms. This growth brings both challenges and chances for better content moderation.

- Rapid smartphone penetration

- Increasing social media usage

- Diverse linguistic and cultural contexts

- Growing digital literacy rates

Rapid Growth of Social Media and Digital Platforms

Social media is growing fast in India. Research shows that managing online content is getting more important.

Challenges and Opportunities in the Indian Market

India’s online world faces unique challenges due to its many languages and cultures. To overcome these, platforms need a combination of intelligent AI and human intervention.

| Moderation Challenge | Potential Solution |
|---|---|
| Multilingual Content | Advanced NLP Technologies |
| Cultural Sensitivity | Localized Moderation Teams |
| High Volume User-Generated Content | Scalable AI Moderation Tools |

As India’s digital landscape continues to expand, there are significant opportunities for those who can develop intelligent, culturally sensitive moderation solutions.

Innovation and future scalability of Content Moderation technologies

The world of content moderation is changing fast thanks to new tech. Digital platforms are working hard to keep up with the huge amount of online content. They want to keep their sites safe and users happy.

New tech trends are changing how we handle content moderation:

- Advanced natural language processing algorithms

- Sophisticated computer vision technologies

- Machine learning-driven contextual analysis

- Real-time content filtering systems

Advancements in Natural Language Processing

Natural language processing is making a big difference in content moderation by helping systems interpret digital messages more reliably. Systems are getting better at catching subtle meanings, sarcasm, and complex language.

Scalable Solutions for Increasing Content Volumes

Future content moderation will focus on highly scalable solutions for big content volumes. AI platforms are getting smarter, learning and improving their detection skills all the time.

Scalability strategies include:

- Distributed computing architectures

- Cloud-based moderation infrastructure

- Adaptive machine learning algorithms

- Hybrid human-AI moderation approaches

Companies are putting a lot of money into new tech for content moderation. They aim to create strong, flexible systems. These systems will handle the fast-changing digital world while keeping users safe and trusting.

Competitive players and service providers in the Content Moderation industry

The content moderation market is highly competitive. Big tech companies and specialized firms are competing for market share, with Google, Meta, and Microsoft among the leaders in advanced moderation tools. Notable providers include:

- Alphabet Inc. – USA

- Microsoft Corporation – USA

- Accenture plc – Ireland

- Amazon Web Services Inc. – USA

- Besedo Ltd. – Switzerland

- Open Access BPO – Philippines

- Clarifai Inc. – USA

- Cogito Tech LLC – USA

- Appen Limited – Australia

- Genpact Limited – USA

Overall

| Report Metric | Details |
|---|---|
| Report Name | Global Content Moderation Solutions Market Report |
| Base Year | 2024 |
| Segment by Type | AI Solutions · Human Moderation · Hybrid Approaches |
| Segment by Application | Social Media · Education · Entertainment |
| Geographies Covered | North America (United States, Canada) · Europe (Germany, France, UK, Italy, Russia) · Asia-Pacific (China, Japan, South Korea, Taiwan) · Southeast Asia (India) · Latin America (Mexico, Brazil) |
| Forecast units | USD million in value |
| Report coverage | Revenue and volume forecast, company share, competitive landscape, growth factors and trends |

The content moderation solutions market is poised for significant transformation and growth through 2025, driven by increasing regulatory pressures, technological advancements, and evolving digital safety requirements. The projected market value of $13.41 billion reflects the critical importance of content moderation across industries and geographies.

Several key factors shape this market’s trajectory:

- The integration of AI and human moderation capabilities is creating more effective hybrid solutions

- Regulatory frameworks across the US, Germany, and India are driving adoption and innovation

- Growing digital content volumes necessitate scalable, intelligent moderation approaches

- Geopolitical factors increasingly influence content moderation policies and implementation

The market faces important challenges around balancing free speech with content control, navigating complex international regulations, and addressing cultural sensitivities. However, technological advances in AI, machine learning, and natural language processing are enabling more sophisticated and nuanced moderation capabilities.

As digital platforms continue to expand globally, the demand for robust content moderation solutions will likely accelerate. Success in this market will require solutions that can effectively combine automated systems with human oversight while adapting to evolving regulatory requirements and user expectations.

The future of content moderation lies in developing scalable, culturally-aware solutions that can protect users while preserving open dialogue across digital spaces. Companies that can deliver these capabilities while navigating the complex regulatory landscape will be well-positioned in this growing market.

Global Content Moderation Solutions Market Report (free sample available) – Table of Contents

Chapter 1: Content Moderation Solutions Market Analysis Overview

- Competitive Forces Analysis (Porter’s Five Forces)

- Strategic Growth Assessment (Ansoff Matrix)

- Industry Value Chain Insights

- Regional Trends and Key Market Drivers

- Content Moderation Solutions Market Segmentation Overview

Chapter 2: Competitive Landscape

- Global Content Moderation Solutions Players and Regional Insights

- Key Players and Market Share Analysis

- Sales Trends of Leading Companies

- Year-on-Year Performance Insights

- Competitive Strategies and Market Positioning

- Key Differentiators and Strategic Moves

Chapter 3: Content Moderation Solutions Market Segmentation Analysis

- Key Data and Visual Insights

- Trends, Growth Rates, and Drivers

- Segment Dynamics and Insights

- Detailed Market Analysis by Segment

Chapter 4: Regional Market Performance

- Consumer Trends by Region

- Historical Data and Growth Forecasts

- Regional Growth Factors

- Economic, Demographic, and Technological Impacts

- Challenges and Opportunities in Key Regions

- Regional Trends and Market Shifts

- Key Cities and High-Demand Areas

Chapter 5: Content Moderation Solutions Emerging and Untapped Markets

- Growth Potential in Secondary Regions

- Trends, Challenges, and Opportunities

Chapter 6: Product and Application Segmentation

- Product Types and Innovation Trends

- Application-Based Market Insights

Chapter 7: Content Moderation Solutions Consumer Insights

- Demographics and Buying Behaviors

- Target Audience Profiles

Chapter 8: Key Findings and Recommendations

- Summary of Content Moderation Solutions Market Insights

- Actionable Recommendations for Stakeholders

What is driving the growth of Content Moderation Solutions?

The market is growing fast. This is because of stricter rules, more digital content, and worries about safety online. These changes are happening in social media, schools, and media.

How do AI and human moderators work together?

AI and humans work together in moderation. Here’s how it works:

- AI checks content first: AI algorithms are used to scan and analyze content quickly. They can identify obvious violations of community guidelines, such as hate speech or explicit content, with high accuracy.

- Humans make tough decisions: While AI can handle many cases effectively, there are situations that require human judgment. Complex cases, nuanced discussions, or context-specific issues may be better understood by human moderators who can consider the bigger picture.

This team approach combines the strengths of both AI and humans, allowing for efficient moderation while still addressing complex content that requires human intervention.
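
The two-step workflow above can be sketched as a simple routing rule: the model handles clear-cut cases and everything in between goes to a person. This is only an illustration. The thresholds, the `Decision` type, and the `route` function are hypothetical, and real systems tune such values per policy and language.

```python
from dataclasses import dataclass

# Hypothetical thresholds on the model's estimated violation probability.
AUTO_REMOVE_THRESHOLD = 0.95
AUTO_APPROVE_THRESHOLD = 0.05


@dataclass
class Decision:
    action: str   # "remove", "approve", or "human_review"
    score: float  # model's estimated probability of a violation


def route(violation_score: float) -> Decision:
    """Route one item based on a classifier score in [0, 1]."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    # AI checks content first: act automatically only when very confident.
    if violation_score >= AUTO_REMOVE_THRESHOLD:
        return Decision("remove", violation_score)
    if violation_score <= AUTO_APPROVE_THRESHOLD:
        return Decision("approve", violation_score)
    # Humans make the tough decisions: ambiguous scores are escalated.
    return Decision("human_review", violation_score)


for score in (0.99, 0.50, 0.01):
    print(score, route(score).action)
```

Widening the gap between the two thresholds sends more items to human reviewers, trading throughput for caution.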

What are the key challenges in content moderation?

Content moderation faces several significant challenges, including:

- Navigating international regulations and laws

- Striking a balance between free speech and preventing harmful content

- Understanding and respecting diverse cultural perspectives

- Finding effective solutions to manage the increasing volume of content

These challenges require careful consideration and strategic approaches to ensure that content moderation is fair, effective, and compliant with legal requirements.

How do different countries approach content moderation?

Countries have different ways of handling content. Germany has strict rules, the U.S. has Section 230, and India uses its own approach. Each country’s culture and language play a big role.

What technologies are advancing content moderation?

New technologies such as natural language processing and artificial intelligence are making significant advancements in content moderation. These tools have the capability to detect harmful content across various languages and platforms.

Which industries most heavily use content moderation solutions?

Social media, schools, media, and e-commerce use these solutions a lot. They do this to keep users safe, follow rules, and protect their image.

What is the projected market size for Content Moderation Solutions?

The market is expected to grow to $13.41 billion by 2025. This growth is due to more online use, strict rules, and the need for safety online.

How do content moderation solutions handle global diversity?

Solutions are getting better at understanding different cultures and languages. They aim to keep moderation standards the same everywhere.

What are the primary service types in content moderation?

The main types of services in content moderation are:

- AI moderation

- Human moderation

- A combination of both

This combination approach is effective in ensuring proper content management.

What are the emerging trends in content moderation?

New trends include:

- Better AI

- Understanding context

- Real-time moderation

There’s also a focus on complex content detection.